Technology

AI and social media: examples of lagging regulation

Artificial intelligence is perhaps the most striking current example of how fast change happens. Large language models such as ChatGPT have only been publicly available for two years. They are able to draft manuscripts which read well and may actually be quite sensible, though their factual accuracy remains dubious.

This technology has begun to disrupt the entire media business. It will probably have an impact on daily news as well as novels, on political commentary as well as poetry. These AI programmes operate in many different languages. Some media houses are already using them to produce content.

Large language models, however, are still basically unregulated. Teachers must suspect that students use chatbots for their homework and other assignments. Some lawyers have toyed around with AI and learned the hard way that digital programmes sometimes invent information which they then present as factually true. Copyright issues arise, moreover, because AI programmes are trained on existing literature, whose authors may demand to be paid for their intellectual property.

To pass sensible laws regarding large language models, legislators must know more about the impacts. By the time they start drafting laws, however, some impacts of the new technology will already feel normal, and any new regulations may feel like unfair restrictions to some of the people affected. If, on the other hand, the technology has advanced further by the time legislatures finally pass laws, those laws may fail to tackle newly arising issues. Citizens may therefore feel let down by policymakers.

Underregulated social media platforms

Social media platforms are another example. The most important ones were launched in the first decade of this century and some even later. They have international reach but are clearly underregulated. To a large extent, what may or may not be said on Facebook, X/Twitter or TikTok depends on their owners. The public is thus not entitled to truthfulness or decency.

When Facebook managers discovered that their platform was hazardous to teenagers’ mental health, they decided to stay silent rather than change anything. It is well understood that social media platforms maximise user attention by promoting messages that fan hate, fear and envy. Negative messages, after all, tend to keep people engaged. Such messages are socially harmful too, especially when they are excessive and not rooted in reality.

Moreover, the political impacts of social media platforms have changed dramatically over the years. When the Arab Spring uprisings rocked many countries in North Africa and the Middle East, even toppling dictators in Egypt and Libya, people spoke of “Facebook revolutions”. At the time, Facebook allowed open and unsurveilled debate. Today, algorithms are designed differently. For example, they rein in the spread of potentially controversial content that the owners do not like. The platforms play an important role in public discourse, without being regulated as stringently as conventional media.

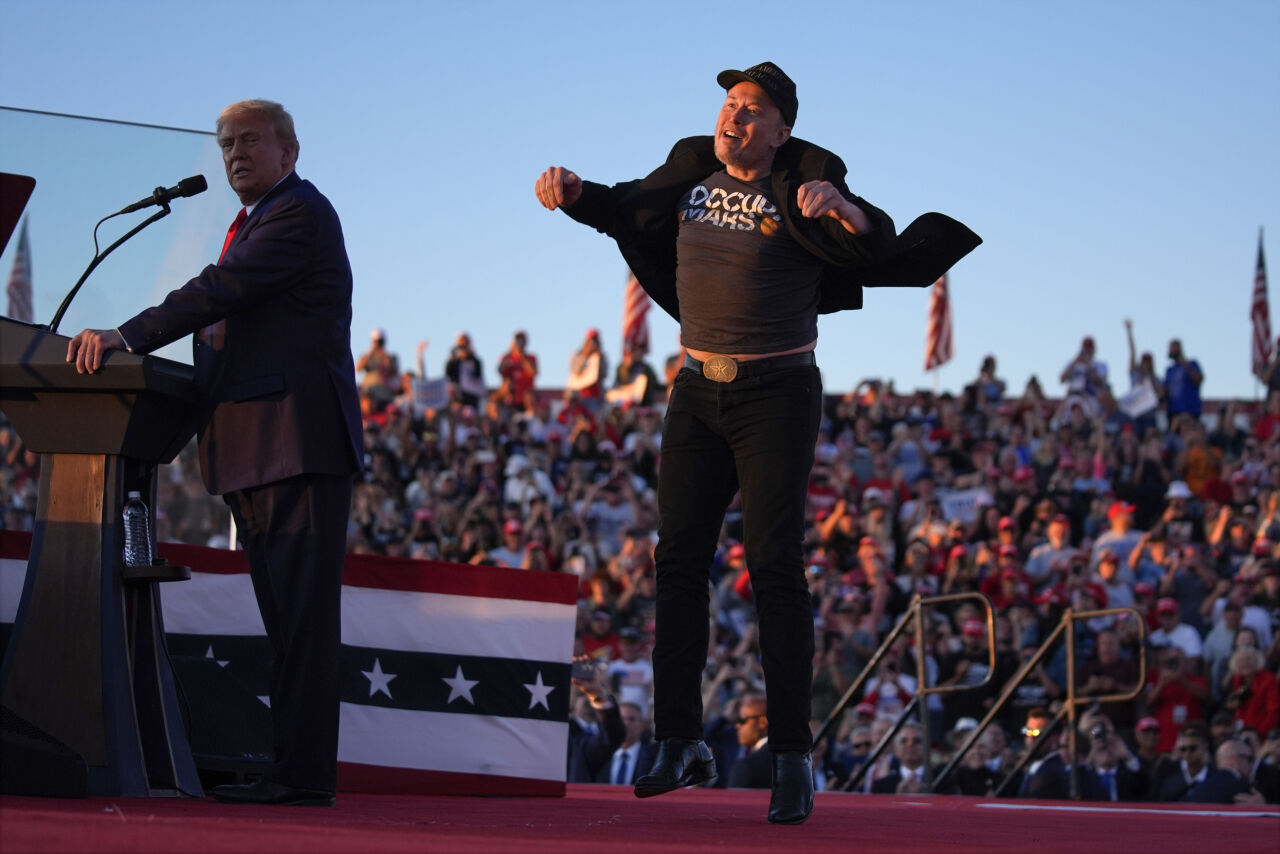

The regulations that apply to conventional media, by the way, are geared to ensuring good-faith contributions, not to suppressing opinions. They serve to ensure that some person is responsible for any message published. US media law does that too, but social media are exempted, so automated bots can spread fake news without users noticing that the messages do not come from a real person. Since many of the most important platforms are based in the United States, the looseness of US law has international repercussions. Elon Musk, the world’s richest person, bought Twitter, reduced content-moderation efforts and renamed the platform X. Reactionary hate speech and aggressive right-wing posts have multiplied since Musk took over, undermining good-faith democratic discourse and supporting politicians with autocratic leanings such as Donald Trump in the USA and Jair Bolsonaro in Brazil.

Hans Dembowski is the editor-in-chief of D+C/E+Z.

euz.editor@dandc.eu