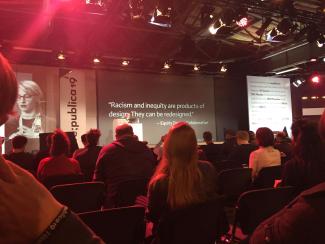

Republica 2019

Technology is no panacea

Currently, only about half of the world's population has access to the internet. Experts doubt that the international community will reach its goal of connecting everyone to the web by 2030. Faced with numerous problems, many people who were technology enthusiasts in the early 2000s have sobered up.

The internet has connected people all over the world. At the same time, it has become a new arena for problems that already exist offline. The internet is increasingly being used to spread disinformation, collect masses of personal data and monitor people. It even serves to manipulate elections, organise violence, wage cyberwar and stimulate mass consumption with little regard for the social and ecological consequences.

“Is this the same space we want to connect the other half of the world's population to?” asked Nanjira Sambuli, a Kenyan researcher and activist, in May at this year’s Republica, an annual conference on digitalisation issues. She focused on the conference motto “tl;dr – too long, didn’t read”, internet slang indicating that someone found an article excessively long and is replying without having read it.

According to Sambuli, the acronym points to a far-reaching cultural phenomenon. In a time of information overload, attention has become a scarce resource. Tl;dr is not a personal attitude but a common coping mechanism. “It would take 70 hours to read through the terms and conditions of the most popular internet services,” says Sambuli. “Who has that much time? On the other side, fun and our friends are waiting for us, so we agree.”

The consequences of this habit are coming back to haunt us. According to Sambuli, new forms of exercising power are emerging. Corporations, governments and non-state actors use big data and algorithms as innovative means of surveillance, manipulation and control. These technologies are human-made and therefore susceptible to human error. Nonetheless, their proponents declare them to be fair and objective.

Algorithms are not neutral

Algorithms have become part of our daily lives. They are step-by-step instructions for solving problems and performing tasks. They are used in the development of artificial intelligence and machine learning (see Benjamin Kumpf in the Focus section of D+C/E+Z e-Paper 2018/10). Artificial intelligence means that computers make decisions that people used to make. Such abilities can also be used to generate insights from extensive data sets.

Algorithms are changing the world of work. Alex Rosenblat, a Canadian ethnographer, has studied Uber, a US-based digital platform that arranges rides. Drivers work as independent contractors and use the app to find customers.

According to Rosenblat, they are controlled by an “algorithmic boss”. The app records detailed information about their driving behaviour and how many trips they take. Drivers then get suggestions on how to improve their behaviour and earn more money. In effect, the algorithm is a management tool.

Uber unilaterally sets the prices for rides. Drivers who have poor ratings or refuse too many rides eventually stop receiving assignments and thus lose their jobs. If, on the other hand, a driver disputes a bad rating, communicating by email with distant Uber customer-service agents is quite cumbersome, not least because they respond with standardised, pre-written answers.

Contrary to much Silicon Valley rhetoric, algorithms, data and digital platforms are not neutral, Rosenblat insists. Uber presents itself as a technology company rather than a transport company. That way, it is not bound by labour laws and can avoid payroll taxes. Nonetheless, the corporation micromanages the performance of its supposedly independent service providers, controlling even the smallest details. It even experiments with changes to its pricing system without informing the drivers.

Algorithms can also compound discrimination. Face-recognition software is an example. Caroline Sinders, a researcher and artist from the USA, says that such programs typically do not recognise darker skin tones well, because the data sets used to train the algorithms are insufficiently diverse. If such software were used at a national border to decide who may enter, non-recognition could become a serious problem.

Sinders adds that some companies have questionable intentions. The Israeli company Faception attributes character traits to facial features with the aim of identifying potential terrorists. Here, racism seems to be built into the design.

According to Sinders, another problem is that users have no say in whether and how algorithms affect them. They are exposed to them but cannot consent, decline or make changes. Nor do they have sufficient rights to have their data deleted.

Sinders insists that human rights should always be carefully considered when products are designed. Transparency is the most important requirement. Products should be designed in ways that allow users to understand them, modify them and give feedback.

More diverse development teams deliver better results. Innovation must be geared to serving the most marginalised groups, says Alexis Hope, a designer from the USA. It makes sense to involve everyone affected in the process, she adds. She presented her project to improve breast pumps for breastfeeding mothers. The ideas were developed in a collaborative and inclusive process.

Crisis of democracy

Algorithms also determine what information users get from search engines and social media. Large data sets about users make it possible to manipulate elections. A case in point was the data-analysis company Cambridge Analytica, which became known worldwide for its role in the 2016 US election campaign.

Cambridge Analytica and its partner companies have also been active in many developing countries. Solana Larsen, a Danish-Puerto Rican researcher, and Renata Avila, a Guatemalan human-rights lawyer, have studied such cases in Latin America. In one case, a company surveyed members of the poorest communities around Mexico City. Those who answered all the questions were given free internet access. They did not know that their data could be misused. Even data about people who are not online can be used for election manipulation.

Cambridge Analytica started operating in Kenya in 2011, influencing the parliamentary elections in 2013 and 2017. According to Nanjala Nyabola, a Kenyan political scientist, many voters were affected by the deliberate dissemination of fake news on social media.

Because of the election technology it deployed, Kenya's 2017 election became the most expensive in the world, at the equivalent of $28 per capita, says Nyabola. Yet the attempt to foster transparency and credibility through technology failed. For Nyabola, this experience shows that technology is no panacea for political and social problems. What is needed is an active citizenry and independent media; otherwise, governments cannot be held accountable.

According to Cory Doctorow, a Canadian author, the underlying cause of many problems is the monopoly position that big technology companies have acquired. In some countries, people hardly use any internet platform other than Facebook. That increases the opportunities for manipulation, and not only by Facebook itself: anyone who uses the platform skilfully can take advantage. Around the world, 2.3 billion people access Facebook every month.

Doctorow argues that the major internet platforms are increasingly doing things one would normally expect governments to do. They are expected, for instance, to prevent hate speech or enforce copyright laws. In Doctorow’s eyes, this concentration of power is a danger to democracy, and the solution would be to break up the monopolies. For example, it should be harder for leading companies to buy up competitors as soon as they emerge.

Monika Hellstern is an assistant editor at D+C/E+Z, focusing on social media.

euz.editor@dandc.eu

Link

Republica 2019:

https://19.re-publica.com/en